Why Your Workstation Choice Is a Critical R&D Investment, Not an IT Expense

Let’s be real for a second. That spinning “solving…” icon isn’t just a minor annoyance; it’s project time, R&D budget, and your own sanity draining away. Thinking of your CFD machine as just another computer is a huge mistake. It’s not an IT expense; it’s fundamentally your primary tool for innovation. A slow, poorly configured machine doesn’t just make you wait longer; it actively discourages you from running that one extra, more detailed simulation that could lead to a breakthrough.

This guide isn’t about just listing parts. It’s about empowering you to make smart investment decisions. If you’re just getting started with the basics of fluid simulation, our ultimate guide to Computational Fluid Dynamics is a fantastic place to begin before diving into hardware.

The Anatomy of a CFD Workflow: Matching Hardware to Simulation Stage

Most people think building a CFD rig is all about throwing as many CPU cores as possible at the problem. It’s not that simple. Your workflow has distinct stages, and each one stresses your hardware in a completely different way. Understanding this is the key to avoiding bottlenecks. ⚙️

Pre-Processing (Meshing): Why Single-Core Speed and RAM Matter Most

This is where you build your digital world—cleaning geometry and generating the mesh. For software like Ansys Meshing or Fluent Meshing, much of this process is single-threaded. That means one core does the heavy lifting. A CPU with a blazing fast clock speed will serve you far better here than a CPU with 32 slow cores. It’s the difference between meshing in minutes versus hours. And since that entire mesh has to live in your system’s memory, having ample RAM is non-negotiable.

Solving: The Insatiable Hunger for CPU Cores and Memory Bandwidth

Here it is—the main event. This is the marathon where your solver iterates until it converges. Now, all those CPU cores come into play. Most modern solvers (like Fluent, STAR-CCM+, or OpenFOAM) are built to run in parallel, distributing the workload across multiple cores. This is where you get that massive speed-up.

But cores are only half the story. All those cores are constantly shouting data at each other through your RAM. If your memory bandwidth is low, it’s like trying to have 16 separate conversations through a single tin can telephone. It becomes a major bottleneck. This is where server-grade CPUs with more memory channels often shine. ☕️

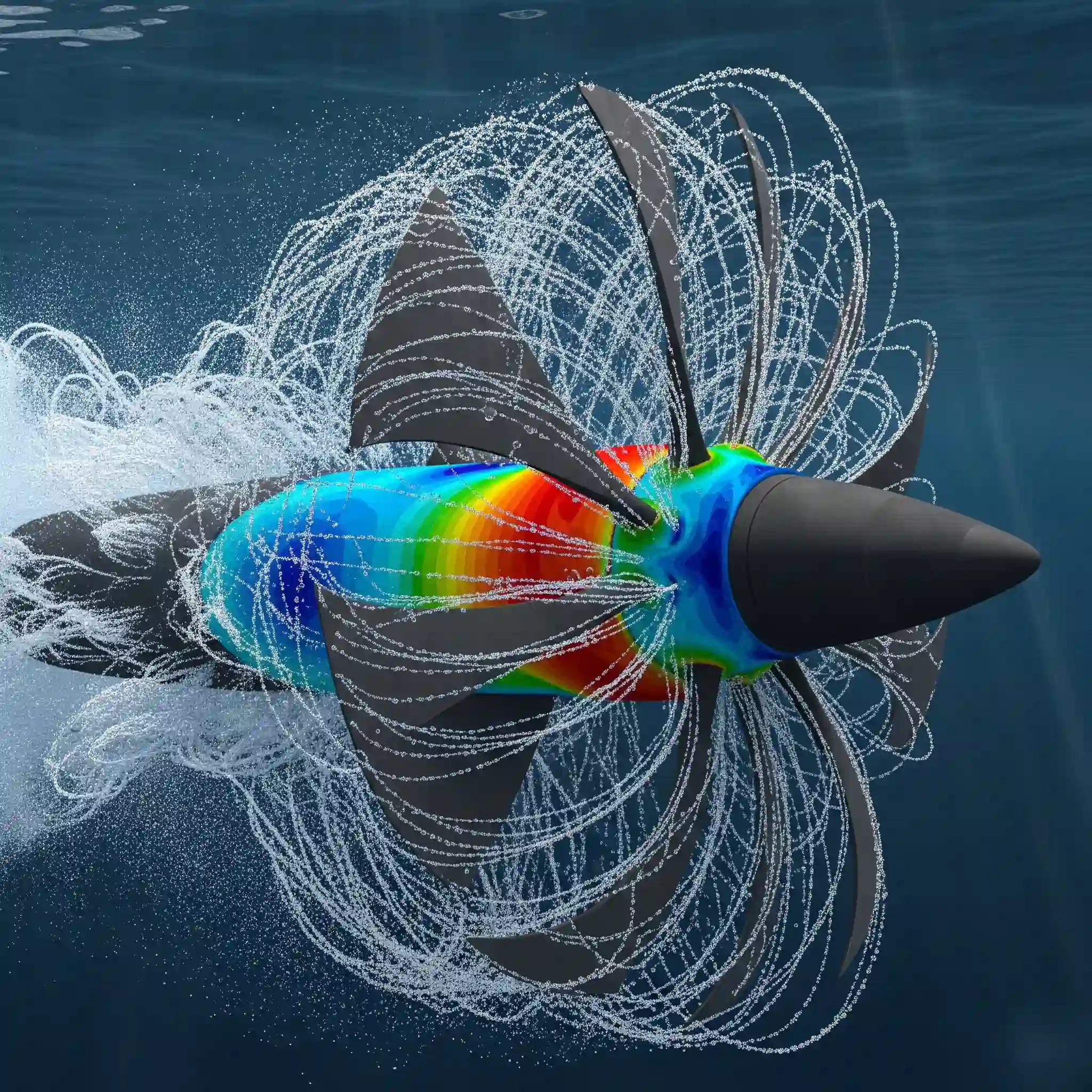
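To put rough numbers on the tin-can problem, here is a minimal sketch of the usual theoretical-peak calculation: channels × transfer rate × 8 bytes per transfer (a 64-bit channel). The two configurations are hypothetical examples, not recommendations, and real sustained bandwidth will land below these peaks.

```python
def peak_memory_bandwidth_gbs(channels: int, transfer_rate_mts: float,
                              bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s (decimal) across populated channels."""
    bytes_per_transfer = bus_width_bits / 8
    return channels * transfer_rate_mts * 1e6 * bytes_per_transfer / 1e9


def bandwidth_per_core_gbs(channels: int, transfer_rate_mts: float,
                           cores: int) -> float:
    """Peak bandwidth divided evenly across cores (a crude upper bound)."""
    if cores < 1:
        raise ValueError("cores must be at least 1")
    return peak_memory_bandwidth_gbs(channels, transfer_rate_mts) / cores


if __name__ == "__main__":
    # Hypothetical configurations, chosen only for illustration.
    configs = [
        ("desktop: 2 channels, DDR5-5600, 16 cores", 2, 5600, 16),
        ("server:  8 channels, DDR5-4800, 32 cores", 8, 4800, 32),
    ]
    for label, channels, rate, cores in configs:
        total = peak_memory_bandwidth_gbs(channels, rate)
        per_core = bandwidth_per_core_gbs(channels, rate, cores)
        print(f"{label}: {total:.1f} GB/s total, {per_core:.1f} GB/s per core")
```

The per-core figure is the one to watch: in this example the server has twice the cores yet still offers each of them more bandwidth than the desktop does.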

Post-Processing (Visualization): Where Your GPU Proves Its Worth

Your simulation is done. Congrats! But raw data is useless. You need to see it. Post-processing is where all those numbers turn into insightful streamlines, contour plots, and animations. Rendering complex scenes with millions of cells, creating iso-surfaces, or manipulating transient results in real-time is incredibly GPU-intensive. A weak GPU will lead to a laggy, frustrating experience, slowing down your ability to actually understand the results.

The Core Components Decoded: A CFDSource Engineer’s Breakdown

Alright, now that we know what each stage needs, let’s cut through the marketing noise and talk specifics. This is about what actually matters for your day-to-day simulations, based on what we’ve seen work—and fail spectacularly—in the real world. Getting this right is how you avoid some of the most common beginner mistakes in CFD.

CPU: The Engine of Your Solver – Cores vs. Clock Speed in Ansys Fluent & STAR-CCM+

Building the perfect CFD workstation often starts with this single, critical choice. The “more cores is always better” mantra can be a trap. I remember a project back in my early days, maybe 15 years ago, where the company invested heavily in a dual-socket server with tons of very slow cores. It looked great on paper. The problem? Our process involved a lot of single-threaded scripting and meshing. The solves were okay, but the pre-processing took days. We learned a hard lesson: the right kind of performance matters more than the raw number.

For commercial software like Ansys, there’s also the licensing cost per core to consider. There’s often a “sweet spot” around 8 to 16 high-frequency cores that gives you the best performance without sky-high licensing fees or diminishing returns. The choice of solver algorithm (the FVM vs FEM debate, for example) can also influence which CPU architecture is more efficient. If your work involves extremely complex physics that standard hardware struggles with, you might need a more tailored approach, something a dedicated CFD consulting service can help spec out. 💡
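One common way to reason about those diminishing returns is Amdahl’s law, which caps the speed-up by whatever fraction of the job stays serial. The sketch below is a textbook model, not a benchmark of any particular solver, and the 95% parallel fraction is a hypothetical value picked for illustration. It also ignores memory bandwidth and inter-core communication, so real scaling is usually worse.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speed-up on `cores` cores when only `parallel_fraction` of the
    work can run in parallel (Amdahl's law)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    parallel_fraction = 0.95  # hypothetical: 5% of the workflow is serial
    for cores in (8, 16, 32, 64):
        speedup = amdahl_speedup(parallel_fraction, cores)
        efficiency = speedup / cores
        print(f"{cores:>2} cores: {speedup:5.2f}x speed-up, "
              f"{efficiency:.0%} efficiency per core")
```

Under these assumptions, each doubling of the core count buys less than the last, which is exactly why paying for licenses on 64 cores rarely makes sense if part of your workflow is stuck on one.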

Here’s a simplified breakdown to guide your choice:

| Component Feature | Better for… | Why it Matters for CFD |
| --- | --- | --- |
| High Clock Speed | Meshing, single-threaded tasks, general system responsiveness. | Speeds up the parts of your workflow that can’t be parallelized, reducing frustrating wait times during setup. |
| High Core Count | The ‘Solving’ phase, especially for large, well-parallelized codes like Fluent or OpenFOAM. | Directly reduces the time-to-solution for the most time-consuming part of your simulation. |
| Large L3 Cache | Almost every stage of CFD. | Acts as a super-fast buffer for the CPU, keeping data close and reducing the time spent waiting for RAM. A very underrated spec! |

RAM: How Much is Enough to Handle Your Mesh? (And Why ECC is Non-Negotiable for Serious Work)

There’s a simple, back-of-the-napkin rule I’ve used for years: budget about 1-2 GB of RAM for every 1 million cells in your mesh. A 10-million-cell simulation? That works out to 10-20 GB for the case itself, so aim for at least 16-32 GB of installed RAM once you leave room for the operating system and your other tools. Running out of RAM is a dead end; your simulation either crashes or starts “swapping” to your SSD, slowing to an unbearable crawl. It’s better to have a little too much than not enough.
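The rule of thumb is easy to turn into a quick calculator. This is a minimal sketch: the 1-2 GB per million cells comes from the rule above, while rounding up to the next common memory size (and the list of sizes itself) is our own assumption for illustration. Actual memory use depends heavily on the solver, the physics models, and precision.

```python
# Assumed list of common installed-memory sizes, for rounding only.
COMMON_RAM_SIZES_GB = (16, 32, 64, 128, 256, 512, 1024)


def estimate_ram_range_gb(cells_millions: float,
                          gb_per_million_low: float = 1.0,
                          gb_per_million_high: float = 2.0) -> tuple:
    """Return the (low, high) RAM estimate in GB for a mesh of the given size."""
    if cells_millions < 0:
        raise ValueError("cells_millions must be non-negative")
    return (cells_millions * gb_per_million_low,
            cells_millions * gb_per_million_high)


def next_common_size_gb(required_gb: float):
    """Smallest size in COMMON_RAM_SIZES_GB that covers the requirement,
    or None if the requirement exceeds every size in the list."""
    for size in COMMON_RAM_SIZES_GB:
        if size >= required_gb:
            return size
    return None


if __name__ == "__main__":
    for cells in (5, 10, 50, 200):
        low, high = estimate_ram_range_gb(cells)
        print(f"{cells:>3}M cells: {low:.0f}-{high:.0f} GB needed, "
              f"buy {next_common_size_gb(low)}-{next_common_size_gb(high)} GB")
```

For a 10-million-cell mesh this gives 10-20 GB needed, which rounds up to 16-32 GB of installed memory.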

Now, let’s talk ECC (Error-Correcting Code) RAM. For any serious professional work, it’s not optional. Standard RAM can have random, single-bit flips caused by cosmic rays or other electrical noise. Over a 48-hour simulation with trillions of calculations, a single bit flip can corrupt your data, leading to a diverged solution or, worse, a converged solution that is subtly wrong. You won’t even know it happened until you try validating your simulation against experimental data and nothing matches. It’s a silent killer of good results.
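If “a single bit flip” sounds too small to matter, here is a small sketch of what it does to one double-precision number. It does not simulate ECC or any solver; it simply flips one chosen bit in the IEEE 754 representation of a value, using Python’s standard `struct` module. The helper name `flip_bit` is ours.

```python
import struct


def flip_bit(value: float, bit: int) -> float:
    """Flip one bit (0 = least significant) in the 64-bit IEEE 754
    representation of `value` and return the resulting float."""
    if not 0 <= bit < 64:
        raise ValueError("bit must be in the range 0..63")
    (as_int,) = struct.unpack("<Q", struct.pack("<d", value))
    flipped = as_int ^ (1 << bit)
    (result,) = struct.unpack("<d", struct.pack("<Q", flipped))
    return result


if __name__ == "__main__":
    original = 1.0
    # Bit 0: lowest mantissa bit. Bits 52 and 62: exponent bits. Bit 63: sign.
    for bit in (0, 52, 62, 63):
        print(f"flip bit {bit:>2}: {original} -> {flip_bit(original, bit)!r}")
```

Flipping the lowest mantissa bit barely changes the value, which is the “subtly wrong” case. Flipping bit 52 halves 1.0 to 0.5, bit 63 turns it into -1.0, and bit 62 turns it into infinity, which is the “diverged solution” case.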

GPU: GeForce vs. Quadro – Debunking the Myths for CFD Visualization and Solving

This is a hot debate. For most CFD users, a high-end gaming card (like an NVIDIA GeForce RTX) is the better value for post-processing. It will give you a smooth, responsive experience when rotating models, creating animations, and plotting data. The specialized drivers and massive price premium of Quadro cards (now sold as NVIDIA’s RTX professional line) are often overkill unless you need specific features for other CAD software or are doing professional ray-traced rendering.

The major exception? GPU-accelerated solvers. Some CFD packages are starting to leverage GPU power for the actual solving process. If that’s your specific workflow, then the calculus changes completely, and a professional-grade card with higher double-precision performance and more VRAM might be necessary.

Storage (NVMe SSD): The Unsung Hero for Faster Load Times and Checkpointing

Don’t neglect your storage! A fast NVMe SSD is one of the biggest quality-of-life upgrades you can make. It won’t make the solver iterate any faster, but it will dramatically reduce the time you spend waiting on file I/O. Loading a 50 GB case file from a traditional hard drive can take several minutes; on an NVMe drive, it’s a matter of seconds.

This is especially critical for long, transient simulations where the solver writes massive checkpoint files every few hours. Writing that data to a slow drive can create a significant pause in your calculation, adding hours to your total runtime. This is a simple, relatively cheap upgrade that you’ll feel the benefit of every single day.
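A quick back-of-the-envelope calculation shows how checkpoint pauses add up. This is a rough sketch that assumes the solver stalls for the full duration of every write and that the drive sustains its sequential write speed; the throughput figures and checkpoint count are hypothetical round numbers, not measurements.

```python
def checkpoint_overhead_hours(checkpoint_gb: float, write_speed_gbs: float,
                              num_checkpoints: int) -> float:
    """Total hours spent writing checkpoints, assuming the solver waits
    for each write to finish."""
    if write_speed_gbs <= 0:
        raise ValueError("write_speed_gbs must be positive")
    if num_checkpoints < 0:
        raise ValueError("num_checkpoints must be non-negative")
    seconds = checkpoint_gb / write_speed_gbs * num_checkpoints
    return seconds / 3600


if __name__ == "__main__":
    checkpoint_gb = 50      # same size as the case file mentioned above
    num_checkpoints = 100   # hypothetical long transient run
    drives = {
        "hard drive (assumed 0.15 GB/s)": 0.15,
        "NVMe SSD (assumed 3 GB/s)": 3.0,
    }
    for name, speed in drives.items():
        hours = checkpoint_overhead_hours(checkpoint_gb, speed, num_checkpoints)
        print(f"{name}: {hours:.1f} h spent writing checkpoints")
```

With these assumed numbers the hard drive costs roughly nine hours of pure waiting over the run, while the NVMe drive costs about half an hour.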

CFDSource Blueprints: 3 Sample Builds for Different Needs & Budgets

Theory is great, but let’s get practical. Here are three archetypes for a CFD workstation. These aren’t exact parts lists, but rather philosophies to guide your build. The best CFD software for your work might slightly alter your priorities, but these are solid starting points.

The Academic & Entry-Level Build:

- CPU: 8-12 cores with very high clock speed (e.g., AMD Ryzen 7/9, Intel Core i7/i9).

- RAM: 32-64 GB of fast, non-ECC RAM.

- GPU: Mid-to-high-end GeForce (e.g., RTX 4060/4070).

- Focus: Maximum single-threaded performance for meshing and a responsive user experience on a budget.

The Professional Engineer’s Workstation:

- CPU: 16-32 cores (e.g., AMD Threadripper, Intel Xeon W-series).

- RAM: 128-256 GB of ECC RAM.

- GPU: High-end GeForce or entry-level Quadro-class professional card (e.g., RTX 4080/4090 or RTX A4000).

- Focus: A balanced workhorse for handling large industrial projects reliably, day in and day out. This is where optimizing your CFD workstation hardware truly pays dividends.

The Enterprise-Grade Powerhouse:

- CPU: Dual Socket with 64+ total cores (e.g., dual AMD EPYC or Intel Xeon Scalable).

- RAM: 512 GB – 1 TB+ of ECC RAM.

- GPU: High-end Quadro-class professional card (e.g., RTX A6000).

- Focus: For running massive, mission-critical simulations that might take weeks to solve. This is for the top 0.1% of use cases where time-to-market is everything.

Beyond the Box: Costly Mistakes We’ve Seen Our Clients Make

Here are a few painful (and expensive) lessons we’ve learned over the years. Avoid these at all costs.

- Mistake #1: Overspending on a Gaming GPU While Skimping on RAM

  Your RTX 4090 is useless if your simulation crashes because you only bought 32 GB of RAM. Always prioritize your core components (CPU and RAM) before splurging on the graphics card.

- Mistake #2: Ignoring Memory Channels and Bottlenecking a Powerful CPU

  Buying a fancy Threadripper CPU that supports quad-channel memory and then only installing two sticks of RAM is like buying a Ferrari and only putting two tires on it. You’re crippling your memory bandwidth and starving your CPU cores of the data they need.

- Mistake #3: Underestimating Cooling and Losing Performance to Thermal Throttling

  A CPU running at 95°C will automatically slow itself down to avoid damage. A high-end air cooler or an AIO liquid cooler isn’t a luxury; it’s a requirement to ensure you’re getting the performance you paid for. Don’t let your investment melt away. 🥵

When Cloud and HPC Make More Sense

Building a local workstation isn’t always the answer. If you only have occasional need for massive computational power or your simulation size varies wildly, cloud computing or a High-Performance Computing (HPC) cluster can be more cost-effective. You pay for what you use and can scale up to thousands of cores for a single job—something a physical workstation could never do. Deciding on this strategy is a key part of the process, often where seeking advice from a fluid dynamics consultant can save you significant time and money in the long run.
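A simple break-even estimate can frame that decision. The sketch below is deliberately crude: every price in it is a hypothetical placeholder, and it ignores licensing, electricity, data transfer, and staff time, all of which can shift the answer.

```python
def breakeven_core_hours(workstation_cost: float,
                         cloud_price_per_core_hour: float) -> float:
    """Core-hours of cloud usage that cost as much as buying the workstation."""
    if cloud_price_per_core_hour <= 0:
        raise ValueError("cloud_price_per_core_hour must be positive")
    return workstation_cost / cloud_price_per_core_hour


def cheaper_option(workstation_cost: float, cloud_price_per_core_hour: float,
                   core_hours_needed: float) -> str:
    """Return 'cloud' or 'workstation' for the expected usage over the
    hardware's lifetime (ties go to the workstation)."""
    cloud_cost = cloud_price_per_core_hour * core_hours_needed
    return "cloud" if cloud_cost < workstation_cost else "workstation"


if __name__ == "__main__":
    workstation_cost = 10_000.0   # hypothetical purchase price
    cloud_price = 0.05            # hypothetical price per core-hour
    limit = breakeven_core_hours(workstation_cost, cloud_price)
    print(f"break-even at about {limit:,.0f} core-hours")
    for usage in (50_000, 500_000):
        choice = cheaper_option(workstation_cost, cloud_price, usage)
        print(f"{usage:>7,} core-hours needed -> {choice}")
```

Occasional users tend to land well below the break-even point, while a machine that solves around the clock passes it quickly.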

Conclusion: Your Hardware Is the Foundation, Your Expertise Is the Structure

At the end of the day, the hardware is just a tool. A very powerful, very important tool, but a tool nonetheless. A great engineer can get decent results from mediocre hardware, but even the best hardware can’t save a poorly set-up simulation. The goal is to build a machine that gets out of your way and lets you focus on the engineering. Hopefully, this guide has given you the confidence to start building the perfect CFD workstation for your specific, important work.